In this article, I’m going to show you how you can manage your minty fresh AKS HCI clusters that have been deployed by PowerShell, from your Windows workstation. It will detail what you need to do to obtain the various config files required to manage the clusters, as well as the tools (kubectl and helm).

I wanted to run this from a system that isn’t one of the HCI cluster nodes, so I could test a ‘real life’ scenario. I wouldn’t want to be installing tools like helm on production HCI servers, although it’s fine for kicking the tires.

Mainly I’m going to show how I’ve automated the installation of the tools, the onboarding process for the cluster to Azure Arc, and also deploying Container Insights, so the AKS HCI clusters can be monitored.

TL;DR - jump here to get the script and what configuration steps you need to do to run it

Here are the high-level steps:

Install the Az PoSh modules

Connect to a HCI cluster node that has the AksHCI PoSh module deployed (where you ran the AKS HCI deployment from)

Copy the kubectl binary from the HCI node to your Win 10 system

Install Chocolatey (if not already installed)

Install Helm via Choco

Get the latest Container Insights deployment script

Get the config files for all the AKS HCI clusters deployed to the HCI cluster

Onboard the cluster to Arc if not already completed

Deploy the Container Insights solution to each of the clusters

Assumptions

You have connectivity to the Internet.

Steps 1 - 5 of the Arc for Kubernetes onboarding have taken place and the service principal has required access to carry out the deployment. Detailed instructions are here

You have already deployed one or more AKS HCI clusters.

Install the Az PoSh Modules

We use the Az module to run some checks that the cluster has been onboarded to Arc. The enable-monitoring.ps1 script requires these modules too.
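As a rough sketch of this step, the check-then-install logic could look like the following. The function name `Install-AzModuleIfMissing` is hypothetical (it is not taken from my script), and the sketch assumes the PowerShell Gallery is reachable and that a per-user install is acceptable.

```powershell
# Hypothetical helper: installs the Az modules only when they are not already present.
function Install-AzModuleIfMissing {
    [CmdletBinding()]
    param()

    # Az.Accounts ships with every Az install, so it is a reasonable presence check.
    if (Get-Module -ListAvailable -Name 'Az.Accounts') {
        Write-Verbose 'Az modules already present; nothing to install.'
        return $false
    }

    # Assumption: a CurrentUser-scoped install from PSGallery is acceptable here.
    Install-Module -Name Az -Repository PSGallery -Scope CurrentUser -AllowClobber -Force
    return $true
}

# Usage (not run here):
# Install-AzModuleIfMissing -Verbose
# Connect-AzAccount
```

Wrapping the install in a presence check keeps repeated runs of the script quick, since the Az rollup module takes a while to download.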

Connect to a HCI Node that has the AksHci PowerShell module deployed

I’m making the assumption that you will have already deployed your AKS HCI cluster via PowerShell, so one of the HCI cluster nodes already has the latest version of the AksHci PoSh module installed. Follow the instructions here if you need guidance.

In the script I wrote, the remote session is stored as a variable and used throughout

Copy the kubectl binary from the HCI node to your Win 10 system

I make it easy on myself by copying the kubectl binary that’s installed as part of the AKS HCI deployment on the HCI cluster node. I use the stored session details to do this. I place it in a directory called c:\wssd on my workstation as it matches the AKS HCI deployment location.
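A minimal sketch of the session and copy steps is below. `Copy-KubectlFromHciNode` is a hypothetical name, and the location of kubectl.exe on the node is deliberately left as a parameter because it depends on your deployment; only the c:\wssd destination comes from my setup.

```powershell
# Hypothetical helper: copies kubectl.exe from the HCI node over an existing remote session.
function Copy-KubectlFromHciNode {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [System.Management.Automation.Runspaces.PSSession]$Session,

        # Path to kubectl.exe on the HCI node. No default: check your own deployment.
        [Parameter(Mandatory)]
        [string]$RemotePath,

        # Local folder that receives the binary.
        [string]$LocalDirectory = 'C:\wssd'
    )

    if (-not (Test-Path -Path $LocalDirectory)) {
        New-Item -ItemType Directory -Path $LocalDirectory -Force | Out-Null
    }

    # -FromSession pulls the file from the remote machine to the local one.
    Copy-Item -FromSession $Session -Path $RemotePath -Destination $LocalDirectory -Force

    # Return the local path so the caller can invoke kubectl later.
    Join-Path -Path $LocalDirectory -ChildPath (Split-Path -Path $RemotePath -Leaf)
}

# Usage (not run here):
# $session = New-PSSession -ComputerName $hcinode
# $kubectl = Copy-KubectlFromHciNode -Session $session -RemotePath '<path to kubectl.exe on the node>'
```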

Install Chocolatey

The recommended way to install Helm on Windows is via Chocolatey, per https://helm.sh/docs/intro/install/, hence the need to install Choco. You can manually install it via https://chocolatey.org/install.ps1, but my script does it for you.
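For reference, a sketch of a guarded Chocolatey install is shown here. It follows the commonly published bootstrap pattern using the install.ps1 URL above; the function name `Install-ChocolateyIfMissing` is hypothetical, and the sketch assumes an elevated PowerShell session.

```powershell
# Hypothetical helper: bootstraps Chocolatey only when choco is not already on the PATH.
function Install-ChocolateyIfMissing {
    [CmdletBinding()]
    param()

    if (Get-Command -Name choco -ErrorAction SilentlyContinue) {
        Write-Verbose 'Chocolatey already installed.'
        return $false
    }

    # Relax the execution policy for this process only, and make sure TLS 1.2 is enabled.
    Set-ExecutionPolicy -ExecutionPolicy Bypass -Scope Process -Force
    [System.Net.ServicePointManager]::SecurityProtocol =
        [System.Net.ServicePointManager]::SecurityProtocol -bor [System.Net.SecurityProtocolType]::Tls12

    # Download and run the official install script.
    Invoke-Expression ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))
    return $true
}

# Usage (not run here, requires an elevated prompt):
# Install-ChocolateyIfMissing -Verbose
```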

Install Helm via Choco

Once Choco is installed, we can go and grab helm by running:

choco install kubernetes-helm -y

Get the latest Container Insights deployment script

Microsoft have provided a PowerShell script to enable monitoring of Arc managed K8s clusters here.

Full documentation on the steps is here.

Get the config files for all the AKS HCI clusters deployed to the HCI cluster

This is where we use the AksHci module to obtain the config files for the clusters we have deployed. First, we get a list of all the deployed AKS HCI clusters with this command:

get-akshcicluster

Then we iterate through those objects and get the config file so we can connect to the Kubernetes cluster using kubectl. Here’s the command:

get-akshcicredential -clustername $AksHciClustername

Onboard the cluster to Arc if not already completed

First, we check to see if the cluster is already onboarded to Arc. We construct the resource Id and then use the Get-AzResource command to check. If the resource doesn’t exist, then we use the Install-AksHciArcOnboarding cmdlet to get the cluster onboarded to our desired subscription, region and resource group.

$aksHciCluster = $aksCluster.Name

$azureArcClusterResourceId = "/subscriptions/$subscriptionId/resourceGroups/$resourceGroup/providers/Microsoft.Kubernetes/connectedClusters/$aksHciCluster"

#Onboard the cluster to Arc

$AzureArcClusterResource = Get-AzResource -ResourceId $azureArcClusterResourceId -ErrorAction SilentlyContinue

if ($null -eq $AzureArcClusterResource) {

Invoke-Command -Session $session -ScriptBlock { Install-AksHciArcOnboarding -clustername $using:aksHciCluster -location $using:location -tenantId $using:tenant -subscriptionId $using:subscriptionId -resourceGroup $using:resourceGroup -clientId $using:appId -clientSecret $using:password }

# Wait until the onboarding has completed...

& $kubectl logs job/azure-arc-onboarding -n azure-arc-onboarding --follow

}

Deploy the Container Insights solution to each of the clusters

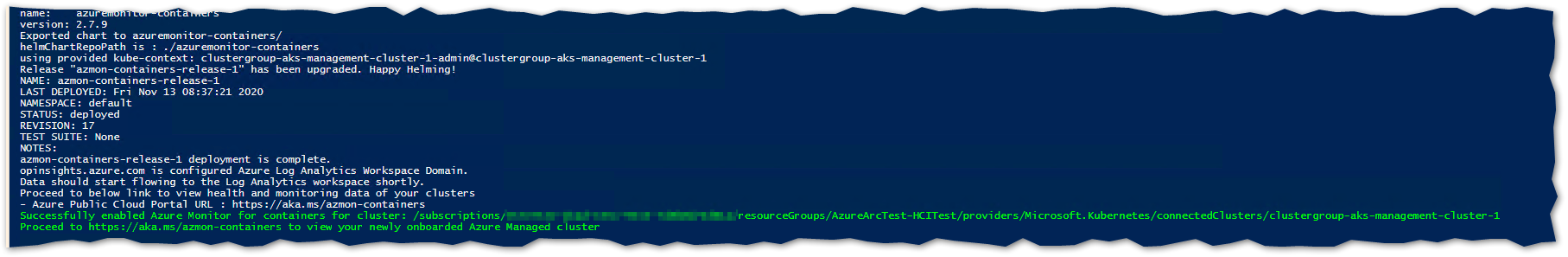

Finally, we use the enable-monitoring.ps1 script with the necessary parameters to deploy the Container Insights solution to the Kubernetes cluster.

NOTE

At the time of developing the script, I found that I had to edit the version of enable-monitoring.ps1 that was downloaded, as the helm chart version defined (2.7.8) was not available. I changed this to 2.7.7 and it worked.

The current version of the script on GitHub is now set to 2.7.9, which works.

If you do find there are issues, it is worth trying a previous version, as I did.
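If you would rather script that change than make it by hand, something like the sketch below could do it. `Set-McrChartVersion` is a hypothetical helper, not part of Microsoft's script, and it assumes the downloaded file assigns the version on a single line of the form `$mcrChartVersion = "x.y.z"` (the manual edit is described next).

```powershell
# Hypothetical helper: rewrites the $mcrChartVersion assignment in a downloaded script.
function Set-McrChartVersion {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]$ScriptPath,
        [Parameter(Mandatory)] [string]$Version
    )

    # Matches a line such as:  $mcrChartVersion = "2.7.8"
    $pattern = '(?m)^([ \t]*\$mcrChartVersion[ \t]*=[ \t]*)"[^"]*"'

    $content = Get-Content -Path $ScriptPath -Raw
    if ($content -notmatch $pattern) {
        throw ('No $mcrChartVersion assignment found in {0}' -f $ScriptPath)
    }

    # Keep the left-hand side (capture group 1) and swap only the quoted version.
    $updated = $content -replace $pattern, ('${1}"' + $Version + '"')
    Set-Content -Path $ScriptPath -Value $updated -NoNewline
}

# Usage (not run here):
# Set-McrChartVersion -ScriptPath .\enable-monitoring.ps1 -Version '2.7.7'
```

Matching on the variable name rather than a line number means the helper keeps working if the script's layout shifts between releases.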

You want to look for where the variable $mcrChartVersion is set (line 63 in the version I downloaded) and change to:

$mcrChartVersion = "2.7.7"

Putting It Together: The Script

With the high level steps described, go grab the script.

You’ll need to modify it once downloaded to match your environment. The variables you need to modify are listed below and are at the beginning of the script. (I didn’t get around to parameterizing it; go stand in the corner, Danny! :) )

$hcinode = '<hci-server-name>'

$resourceGroup = "<Your Arc Resource Group>"

$location = "<Region of resource>"

$subscriptionId = "<Azure Subscription ID>"

$appId = "<App ID of Service Principal>"

$password = "<App ID Secret>"

$tenant = "<Tenant ID for Service Principal>"

Hopefully it’s clear enough that you’ll need to have created a Service Principal in your Azure subscription and to provide its App ID, secret and tenant ID. You also need to provide the ID of the Azure subscription you are connecting Arc to, as well as the resource group name. If you’re manually creating a Service Principal, make sure it has rights to the resource group (e.g. Contributor).

Reminder

Follow Steps 1 - 5 in the following doc to ensure the pre-reqs for Arc onboarding are in place. https://docs.microsoft.com/en-us/azure-stack/aks-hci/connect-to-arc

When the script is run, it will retrieve all the AKS HCI clusters you have deployed and check they are onboarded to Arc. If not, it will go ahead and do that. Then it will retrieve the kubeconfig file, store it locally and add the path to the file to the KUBECONFIG environment variable. Lastly, it will deploy the Container Insights monitoring solution.
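The KUBECONFIG handling is the part most worth getting right, because running the script repeatedly should not keep appending the same file. Here is a minimal sketch of one way to do it; `Add-KubeConfigPath` and the file name in the usage line are hypothetical, and the logic is an illustration rather than a copy of what my script does.

```powershell
# Hypothetical helper: returns a KUBECONFIG value that includes $Path exactly once.
function Add-KubeConfigPath {
    [CmdletBinding()]
    param(
        # Kubeconfig file to add.
        [Parameter(Mandatory)] [string]$Path,

        # Existing KUBECONFIG value; defaults to the current environment variable.
        [string]$Current = $env:KUBECONFIG
    )

    # ';' on Windows, ':' elsewhere.
    $separator = [string][System.IO.Path]::PathSeparator

    $entries = @()
    if (-not [string]::IsNullOrWhiteSpace($Current)) {
        # Split the existing value and drop any empty entries.
        $entries = @($Current -split [regex]::Escape($separator) | Where-Object { $_ })
    }

    # Only append when the path is not already listed (comparison is case-insensitive).
    if ($entries -notcontains $Path) {
        $entries += $Path
    }

    $entries -join $separator
}

# Usage (not run here; the file name is a placeholder):
# $env:KUBECONFIG = Add-KubeConfigPath -Path 'C:\wssd\my-cluster-kubeconfig'
```

Because the function returns the new value instead of setting the variable itself, you can decide whether to apply it to the current session only or persist it for the user.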

Here is an example of the Arc onboarding logs

and here is confirmation of successful deployment of the Container Insights solution to the cluster.

What you will see in the Azure Portal for Arc managed K8s clusters:

Before onboarding my AKS HCI clusters…

..and after

Here’s an example of what you will see in the Azure Portal when the Container Insights solution is deployed to the cluster. Lots of great insights and information are surfaced:

On my local system, I can now administer my clusters using kubectl and Helm. Here’s an example showing that I have multiple clusters in my config, each with its own context:

The config is derived from the KUBECONFIG environment variable. Note how the config files I retrieved are explicitly stated:

I’m sure that as AKS HCI matures, more elegant solutions to enable remote management and monitoring will be available, but in the meantime, I’m pretty pleased that I achieved what I set out to do.